Recommendation System by Siamese Network

Recommendations using triplet loss

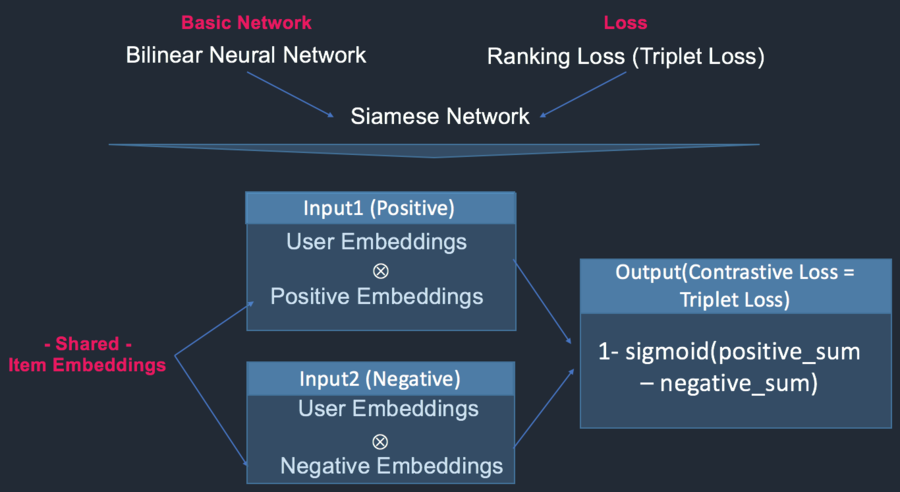

When a user specifies both positive and negative items, a recommender based on a Siamese network can take those preferences into account and rank the positive items above the negative ones. To implement this, I adapted maciej’s GitHub code to account for user-specific negative preferences. As in word2vec, where a matrix multiplication maps words to their embeddings, the latent factor matrix can be represented by embedding layers. The original idea is to build a bilinear neural network with a ranking loss (triplet loss) and combine them into a Siamese network architecture (siames_blog). Each triplet consists of a user, a positive item, and a negative item.
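To make the word2vec analogy concrete, the following minimal sketch checks that multiplying a one-hot vector by a latent factor matrix gives the same result as looking up a row, which is what an embedding layer does. The sizes, the random matrix, and all variable names are assumptions chosen for illustration; none of them come from the original code.

```python
import numpy as np

rng = np.random.default_rng(0)
num_items, latent_dim = 5, 3  # hypothetical sizes, for illustration only
item_factors = rng.normal(size=(num_items, latent_dim))  # latent factor matrix

item_id = 2
one_hot = np.zeros(num_items)
one_hot[item_id] = 1.0

via_matmul = one_hot @ item_factors  # word2vec-style matrix multiplication
via_lookup = item_factors[item_id]   # row lookup, as an embedding layer would do

same = np.allclose(via_matmul, via_lookup)
```

Because the two paths agree, the embedding layer can be read as a trainable latent factor matrix indexed by item (or user) ID.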

The picture shows how the architecture is constructed. The positive and negative item embeddings share a single item embedding layer, and each is multiplied by the user embedding. The original code used the MovieLens 100k dataset and selected negative items at random, which can cause some positive items to end up among the negatives. Instead, I treat items rated 2 or lower as negative and items rated 5 as positive.
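As a rough sketch of this labeling rule, the helper below maps a rating to the role it plays in the preprocessing code: 5 is positive, 2 or lower is negative, 3 is held back as filler, and anything else is left out. The function name `label_rating` and the label strings are hypothetical and do not appear in the original code.

```python
def label_rating(rating):
    """Map a MovieLens rating (1 to 5) to its role in the triplet data."""
    if rating >= 5:
        return "positive"
    if rating <= 2:
        return "negative"
    if rating == 3:
        return "filler"   # only used to balance the two classes
    return "ignored"      # a rating of 4 is not used at all


labels = {rating: label_rating(rating) for rating in range(1, 6)}
```

Unlike random negative sampling, this rule never labels an item the user rated 5 as a negative.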

Because the positive and negative inputs share the same item embedding layer and are fed to the network together, there must be as many negative samples as positive ones. To make up the shortfall in negative items, I randomly select items rated 3 and add them to the negatives. Finally, the network is built with Keras on the TensorFlow backend. After 20 epochs, the model reaches an AUC (area under the ROC curve) of 82%.
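For readers unfamiliar with AUC as a ranking metric, it can be read as the fraction of (positive, negative) pairs in which the positive item receives the higher score. The sketch below computes it that way with NumPy. The name `pairwise_auc` and the toy scores are assumptions for illustration; the evaluation code behind the 82% figure is not shown in this post.

```python
import numpy as np


def pairwise_auc(pos_scores, neg_scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = np.asarray(pos_scores, dtype=float)[:, None]
    neg = np.asarray(neg_scores, dtype=float)[None, :]
    wins = (pos > neg).sum()
    ties = (pos == neg).sum()
    return (wins + 0.5 * ties) / (pos.size * neg.size)


# Toy scores: three of the four pairs are ordered correctly.
auc = pairwise_auc([0.9, 0.4], [0.5, 0.1])  # 0.75
```

An AUC of 0.5 corresponds to random ordering and 1.0 to every positive item being ranked above every negative item.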

Specific implementation

Data import

import numpy as np
import itertools
import os
import requests
import zipfile
import scipy.sparse as sp
from sklearn.metrics import roc_auc_score

def download_movielens(dest_path):
    # Stream the MovieLens 100k archive to disk.
    url = 'http://files.grouplens.org/datasets/movielens/ml-100k.zip'
    req = requests.get(url, stream=True)
    with open(dest_path, 'wb') as fd:
        for chunk in req.iter_content():
            fd.write(chunk)

def get_raw_movielens_data():
    # Download the archive on first use, then return the train and test rows.
    path = '/path/to/data/saved/movielens.zip'
    if not os.path.isfile(path):
        download_movielens(path)
    with zipfile.ZipFile(path) as datafile:
        return (datafile.read('ml-100k/ua.base').decode().split('\n'),
                datafile.read('ml-100k/ua.test').decode().split('\n'))

Data Pre-Processing

def parse(data):
    # Yield (uid, iid, rating, timestamp) integer tuples, skipping empty lines.
    for line in data:
        if not line:
            continue
        uid, iid, rating, timestamp = [int(x) for x in line.split('\t')]
        yield uid, iid, rating, timestamp

def build_interaction_matrix(rows, cols, data):
    mat_pos = sp.lil_matrix((rows, cols), dtype=np.int32)
    mat_neg = sp.lil_matrix((rows, cols), dtype=np.int32)
    mat_mid = sp.lil_matrix((rows, cols), dtype=np.int32)
    for uid, iid, rating, timestamp in data:
        if rating >= 5.0:
            mat_pos[uid, iid] = 1.0
        elif rating <= 2.0:
            mat_neg[uid, iid] = 1.0
        elif rating == 3.0:
            mat_mid[uid, iid] = 1.0
    possum = mat_pos.sum()
    negsum = mat_neg.sum()
    # Filler candidates: (user, item) pairs rated 3, in random order
    b = mat_mid.toarray() == 1.0
    index = np.column_stack(np.where(b))
    np.random.shuffle(index)
    if negsum < possum:
        # Too few negatives: move the first N (deficient) rating-3 pairs into mat_neg
        deficient = possum - negsum
        for row, col in index[:deficient]:
            mat_neg[row, col] = 1.0
    elif negsum > possum:
        # Too few positives: move the first N (deficient) rating-3 pairs into mat_pos
        deficient = negsum - possum
        for row, col in index[:deficient]:
            mat_pos[row, col] = 1.0
    # The counts can still differ if there were not enough rating-3 pairs
    if mat_pos.sum() != mat_neg.sum():
        print("Number of positive and negative items doesn't match!")
    return (mat_pos.tocoo(), mat_neg.tocoo())
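The balancing step can also be looked at in isolation, without sparse matrices. The sketch below pads a short list of (user, item) pairs with randomly chosen pairs from a pool until it reaches a target size. The function name `top_up`, its parameters, and the toy pairs are hypothetical; this illustrates the idea and is not the code used in the post.

```python
import numpy as np


def top_up(minority, pool, target_size, seed=0):
    """Pad `minority` with random rows from `pool` until it has `target_size` rows."""
    minority = np.asarray(minority).reshape(-1, 2)
    pool = np.asarray(pool).reshape(-1, 2)
    deficient = max(target_size - len(minority), 0)
    rng = np.random.default_rng(seed)
    # Shuffle the pool and keep only as many rows as are missing.
    picked = pool[rng.permutation(len(pool))[:deficient]]
    return np.concatenate([minority, picked])


negatives = [(0, 1)]                 # (user, item) pairs rated 2 or lower
neutral = [(0, 2), (1, 0), (1, 3)]   # (user, item) pairs rated 3
balanced = top_up(negatives, neutral, target_size=3)
```

If the pool is smaller than the shortfall, the result simply stays shorter than `target_size`, which is the situation the mismatch warning in `build_interaction_matrix` reports.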

def get_movielens_data():
    train_data, test_data = get_raw_movielens_data()
    uids = set()
    iids = set()
    for uid, iid, rating, timestamp in itertools.chain(parse(train_data), parse(test_data)):
        uids.add(uid)
        iids.add(iid)
    # IDs are used directly as indices, so the matrices need max ID + 1 rows and columns.
    rows = max(uids) + 1
    cols = max(iids) + 1
    train_pos, train_neg = build_interaction_matrix(rows, cols, parse(train_data))
    test_pos, test_neg = build_interaction_matrix(rows, cols, parse(test_data))
    return (train_pos, train_neg, test_pos, test_neg)

Keras Model Implementation

from keras import backend as K
from keras.models import Model, Sequential
from keras.layers import Embedding, Flatten, Input, Convolution1D, merge
from keras.optimizers import Adam

def bpr_triplet_loss(X):
    positive_item_latent, negative_item_latent, user_latent = X
    # BPR loss: small when the positive item scores higher than the negative item
    loss = 1.0 - K.sigmoid(
        K.sum(user_latent * positive_item_latent, axis=-1, keepdims=True) -
        K.sum(user_latent * negative_item_latent, axis=-1, keepdims=True))
    return loss

def identity_loss(y_true, y_pred):
    # The model output is already the loss, so y_true is ignored.
    return K.mean(y_pred - 0 * y_true)
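To see how the loss behaves, the same formula can be evaluated with plain NumPy on hand-picked vectors. This is a sketch for intuition only: `bpr_triplet_loss_np`, `sigmoid`, and the toy embeddings are assumptions for illustration and are not part of the Keras model.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def bpr_triplet_loss_np(positive_item_latent, negative_item_latent, user_latent):
    """NumPy version of 1 - sigmoid(user . positive - user . negative)."""
    pos_score = np.sum(user_latent * positive_item_latent, axis=-1, keepdims=True)
    neg_score = np.sum(user_latent * negative_item_latent, axis=-1, keepdims=True)
    return 1.0 - sigmoid(pos_score - neg_score)


user = np.array([[1.0, 0.0]])
liked = np.array([[2.0, 0.0]])      # points in the same direction as the user
disliked = np.array([[-2.0, 0.0]])  # points in the opposite direction

good_order = bpr_triplet_loss_np(liked, disliked, user)  # positive scored higher
bad_order = bpr_triplet_loss_np(disliked, liked, user)   # negative scored higher
```

The loss is close to 0 when the positive item outscores the negative one and close to 1 when the order is reversed, so minimizing it pushes positive items above negative items for each user.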

def build_model(num_users, num_items, latent_dim):
    # Input
    positive_item_input = Input((1, ), name='positive_item_input')
    negative_item_input = Input((1, ), name='negative_item_input')
    user_input = Input((1, ), name='user_input')
    # Embedding
    # Shared embedding layer for positive and negative items
    item_embedding_layer = Embedding(num_items, latent_dim, name='item_embedding', input_length=1)
    positive_item_embedding = Flatten()(item_embedding_layer(positive_item_input))
    negative_item_embedding = Flatten()(item_embedding_layer(negative_item_input))
    user_embedding = Flatten()(Embedding(num_users, latent_dim, name='user_embedding', input_length=1)(user_input))
    # Loss and model
    # Note: merge() with a callable mode and Model(input=..., output=...) are legacy Keras 1.x API.
    loss = merge(
        [positive_item_embedding, negative_item_embedding, user_embedding],
        mode=bpr_triplet_loss,
        name='loss',
        output_shape=(1, ))
    model = Model(
        input=[positive_item_input, negative_item_input, user_input],
        output=loss)
    # The network outputs the loss itself, so identity_loss just averages it.
    model.compile(loss=identity_loss, optimizer=Adam())
    return model
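Once the model is trained, recommendations come from the learned embeddings rather than from the loss output. The sketch below assumes the user and item embedding weights have already been extracted as NumPy arrays and ranks items by their dot product with a user vector. The function `rank_items` and the toy matrices are hypothetical; the post does not show its prediction code.

```python
import numpy as np


def rank_items(user_vector, item_matrix, top_k=3):
    """Return the indices and scores of the top_k items for one user."""
    scores = item_matrix @ user_vector    # one dot product per item
    order = np.argsort(-scores)[:top_k]   # highest score first
    return order, scores[order]


# Toy embeddings standing in for trained weights (4 items, 2 latent dimensions).
item_matrix = np.array([[1.0, 0.0],
                        [0.0, 1.0],
                        [0.5, 0.5],
                        [-1.0, 0.0]])
user_vector = np.array([1.0, 0.2])

top_items, top_scores = rank_items(user_vector, item_matrix, top_k=2)
```

The same scores can be used for evaluation by comparing the values assigned to a user's held-out positive and negative items.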